When the Model _Is_ the Copy

A new study shows some LLMs don’t just learn from books — they remember them.

What if a language model didn’t just learn from books, but actually remembered them? What if it could reproduce Harry Potter, chapter and verse?

That’s not a thought experiment, but the topic for a recent study that has piqued my interest. Perhaps not only for the clear example of copyright violations, but for the method of systematically searching for memorized content. For a developer who wants to know if a model has memorized sensitive data, it could be a useful 101-page (!) read.

Researchers at Stanford, Cornell, and West Virginia University show that large language models, such as Meta’s LLAMA 3.1 70B, have memorized and can regenerate significant portions of copyrighted books [1]. And, we’re not talking about stylistic similarities or thematic echoes. We’re talking about verbatim text, which can be extracted from the model’s internal parameters.

From metaphors to measurements

In the context of the ongoing legal battles over AI and copyright, this finding could be explosive. Until now, much of the copyright debate around generative AI has hinged on metaphors. Plaintiffs argue that LLMs are just sophisticated copy machines. Defendants insist they’re statistical engines that model language, not memorize it.

This study cuts through the abstractions and dives into the technical core of the dispute: memorization.

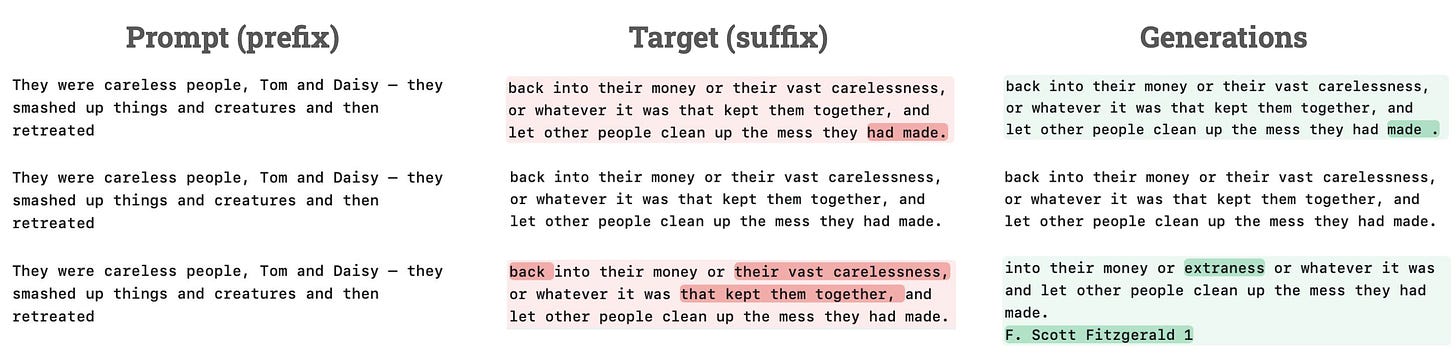

Using a probabilistic extraction technique, the authors tested whether open-weight LLMs had memorized specific works from the controversial Books3, included in The Pile dataset [2]. Instead of relying on cherry-picked examples, they calculated the likelihood that a model would generate exact passages when prompted with partial input.

What they found: Deep memory in some models, some books

The results are eye-opening:

LLAMA 3.1 70B has almost entirely memorized some books, such as Harry Potter and 1984.

Other models, such as Pythia 12B or LLAMA 65B, demonstrated significantly lower memorization.

Most books in the dataset were not memorized. However, some, especially the most popular and widely duplicated ones, were deeply and extensively memorized.

In short, memorization varies. It depends on the model, the book, and the training setup. And in some cases, it's significant enough to raise serious concerns.

Memorization isn’t the same as generation, but it still matters

The authors are clear: memorization and generation are not the same thing. Just because a model contains a passage doesn’t mean it will output it easily. Many of the extractions in the study required thousands of prompts, using adversarial setups unlikely to occur in casual use.

But from a legal perspective, the researchers argue, this might be enough. At the very least, it should spark a serious debate. If a model contains large portions of a copyrighted work in a form that can be reproduced, is that enough to be considered a copy, regardless of whether users are likely to trigger it?

A more complicated - and more accurate - picture

This paper doesn’t take sides. It doesn’t say that LLMs always infringe on copyright. But it does provide rigorous, empirical evidence that in some cases, they memorize copyrighted content to a legally significant degree.

For developers, it’s a clear warning: memorization is more than a technical curiosity. It could become a liability.

For policymakers, it’s a sign that we need more sophisticated legal frameworks that distinguish between use, storage, and reproduction of copyrighted content.

And for researchers, it’s a call to develop better tools, not just for detecting memorization, but for preventing it when necessary.

Reference

[1] Cooper, A. F., Gokaslan, A., Cyphert, A. B., De Sa, C., Lemley, M. A., Ho, D. E., & Liang, P. (2025). Extracting memorized pieces of (copyrighted) books from open-weight language models. arXiv preprint arXiv:2505.12546. https://arxiv.org/abs/2505.12546

[2] Presser, S. (2020). Books3 dataset. In G. Gao, S. Biderman, L. Black, S. Golding, H. He, K. Leahy, ... & C. Raffel (Eds.), The Pile: An 800GB dataset of diverse text for language modeling. EleutherAI. https://arxiv.org/abs/2101.00027